OrdinalEncoder: When Order Matters in Categorical Data

In our previous post, we explored one-hot encoding, which creates binary columns for each category. But what if your categorical variable has a natural order, or you need a more compact representation? That’s where ordinal encoding comes in.

Today, we’ll dive into the OrdinalEncoder from the category_encoders library - a simple yet powerful tool for handling categorical data.

The Concept: Mapping Categories to Numbers

Ordinal encoding is conceptually simpler than one-hot encoding. Instead of creating multiple binary columns, it assigns a single integer to each category. For example:

| Original | Encoded |

|---|---|

| small | 0 |

| medium | 1 |

| large | 2 |

This approach is particularly useful when:

- Your categories have a natural order (like small, medium, large)

- You want to preserve this ordering information

- You need to reduce dimensionality compared to one-hot encoding

How Ordinal Encoding Works

The process is straightforward:

- Identify all unique categories in your data

- Assign a unique integer to each category (either automatically or manually)

- Replace each category with its corresponding integer

The mapping can be determined in several ways:

- Alphabetical order

- Frequency order (most common to least common)

- Custom order specified by the user

- Random assignment

Implementation with category_encoders

The category_encoders library provides a robust implementation of ordinal encoding:

import pandas as pd

import category_encoders as ce

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

# Sample data

data = pd.DataFrame({

'color': ['red', 'blue', 'green', 'red', 'green', 'blue', 'red', 'green'],

'size': ['small', 'medium', 'large', 'medium', 'small', 'large', 'medium', 'small'],

'price': [10.5, 15.0, 20.0, 11.0, 19.5, 22.5, 12.0, 18.5]

})

# Initialize the encoder

encoder = ce.OrdinalEncoder(cols=['color', 'size'])

# Fit and transform

encoded_data = encoder.fit_transform(data)

print(encoded_data)

# Get the mapping

mapping = encoder.mapping

print("Mapping:")

for m in mapping:

print(f"{m['col']} mapping: {m['mapping']}")

This produces the following output:

color size price

0 1 1 10.5

1 2 2 15.0

2 3 3 20.0

3 1 2 11.0

4 3 1 19.5

5 2 3 22.5

6 1 2 12.0

7 3 1 18.5

Mapping:

color mapping: red 1

blue 2

green 3

NaN -2

dtype: int64

size mapping: small 1

medium 2

large 3

NaN -2

dtype: int64

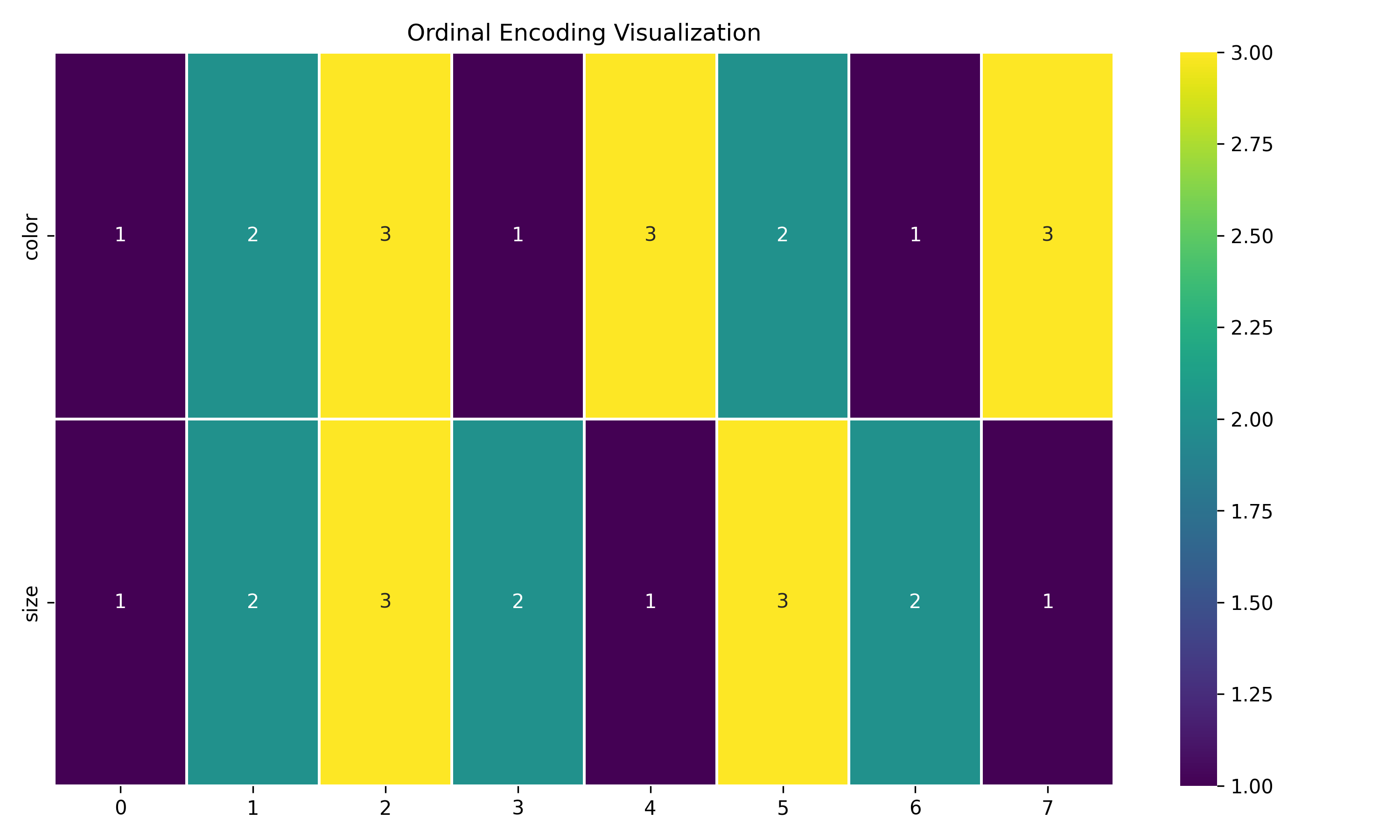

We can visualize this encoding with a heatmap:

# Visualize the encoding

plt.figure(figsize=(10, 6))

encoded_cols = ['color', 'size']

sns.heatmap(encoded_data[encoded_cols].T, cmap='viridis', cbar=True,

linewidths=1, annot=True, fmt='g')

plt.title('Ordinal Encoding Visualization')

plt.tight_layout()

Custom Mapping

One of the most powerful features of OrdinalEncoder is the ability to specify custom mappings:

# Custom mapping example

size_mapping = {'small': 1, 'medium': 2, 'large': 3}

color_mapping = {'red': 10, 'green': 20, 'blue': 30}

# Initialize encoder with custom mapping

encoder_custom = ce.OrdinalEncoder(

cols=['color', 'size'],

mapping=[

{'col': 'size', 'mapping': size_mapping},

{'col': 'color', 'mapping': color_mapping}

]

)

# Fit and transform

encoded_data_custom = encoder_custom.fit_transform(data)

print("Encoded data with custom mapping:")

print(encoded_data_custom)

Output:

color size price

0 10 1 10.5

1 30 2 15.0

2 20 3 20.0

3 10 2 11.0

4 20 1 19.5

5 30 3 22.5

6 10 2 12.0

7 20 1 18.5

This is particularly important when your categories have a natural ordering that you want to preserve.

Advanced Features in category_encoders’ OrdinalEncoder

The OrdinalEncoder in category_encoders offers several advantages over simpler implementations:

1. Handling unknown categories

# Handle new categories in test data that weren't in training data

encoder = ce.OrdinalEncoder(handle_unknown='value')

2. Return mappings

# Get the mapping from original categories to encoded values

mappings = encoder.category_mapping

3. Inverse transform

# Convert back to original representation

original_data = encoder.inverse_transform(encoded_data)

4. Working with pandas DataFrames

# Direct support for pandas DataFrames

encoded_df = encoder.fit_transform(df)

Real-World Example: Predicting Customer Churn

Let’s use OrdinalEncoder in a practical scenario - predicting customer churn:

import pandas as pd

import category_encoders as ce

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import classification_report

# Sample customer data

customers = pd.DataFrame({

'subscription_length': ['1-month', '6-month', '12-month', '1-month', '12-month', ...],

'usage_level': ['low', 'medium', 'high', 'low', 'high', ...],

'support_calls': [5, 1, 0, 10, 2, ...],

'payment_delay': [10, 0, 0, 15, 0, ...],

'churn': [1, 0, 0, 1, 0, ...] # Target variable: 1 = churned, 0 = retained

})

# Define custom mappings for ordinal variables

mapping = [

{'col': 'subscription_length', 'mapping': {'1-month': 0, '6-month': 1, '12-month': 2}},

{'col': 'usage_level', 'mapping': {'low': 0, 'medium': 1, 'high': 2}}

]

# Initialize the encoder

encoder = ce.OrdinalEncoder(cols=['subscription_length', 'usage_level'], mapping=mapping)

# Split the data

X = customers.drop('churn', axis=1)

y = customers['churn']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Fit the encoder on training data only

X_train_encoded = encoder.fit_transform(X_train)

X_test_encoded = encoder.transform(X_test)

# Train a model

model = RandomForestClassifier(random_state=42)

model.fit(X_train_encoded, y_train)

# Make predictions

y_pred = model.predict(X_test_encoded)

# Evaluate

print(classification_report(y_test, y_pred))

# Feature importance

feature_importance = pd.DataFrame({

'feature': X_train_encoded.columns,

'importance': model.feature_importances_

}).sort_values('importance', ascending=False)

print("\nFeature Importance:")

print(feature_importance)

This example demonstrates how ordinal encoding can be used effectively with tree-based models, preserving the natural ordering of categories like subscription length and usage level.

When Ordinal Encoding Shines

Ordinal encoding is particularly effective in certain scenarios:

When categories have a natural order: For variables like education level (high school, bachelor’s, master’s, PhD), size (small, medium, large), or satisfaction ratings (poor, fair, good, excellent)

With tree-based models: Decision trees, random forests, and gradient boosting machines can often work well with ordinal encoding

For dimensionality reduction: When dealing with high-cardinality categorical variables, ordinal encoding creates just one feature instead of many

As an input to other encodings: Some advanced encoding techniques use ordinal encoding as a preprocessing step

Limitations and Considerations

While powerful, ordinal encoding has some important limitations:

Implied ordering: It imposes an order on categories, which may not be appropriate if no natural order exists

Equal spacing assumption: The numerical difference between categories is always 1, which may not reflect the true relationship

Distance distortion: For algorithms that use distance metrics (like k-means clustering), ordinal encoding can distort the true distances between categories

Arbitrary assignment: If no mapping is provided, the assignment of numbers to categories may be arbitrary

Conclusion: The Right Tool for Ordered Categories

Ordinal encoding offers a simple yet effective way to handle categorical data, especially when those categories have a natural order. While it’s not appropriate for every situation, it’s a valuable tool in your feature engineering toolkit.

The key to using ordinal encoding effectively is understanding whether your categorical variable has a meaningful order, and if so, ensuring that your encoding preserves that order. When used appropriately, it can significantly improve model performance while keeping your feature space manageable.

In our next post, we’ll explore BinaryEncoder, which offers a middle ground between one-hot and ordinal encoding by representing categories as binary code.

Stay in the loop

Get notified when I publish new posts. No spam, unsubscribe anytime.